Abstract

Peer review is a cornerstone of academic publishing, ensuring the integrity, novelty, and accuracy of published research. However, it faces challenges such as reviewer bias, inconsistent assessments, and concerns about the design of review mechanisms. These issues can undermine the fairness and integrity of scientific evaluation, especially given the growing volume of academic submissions.

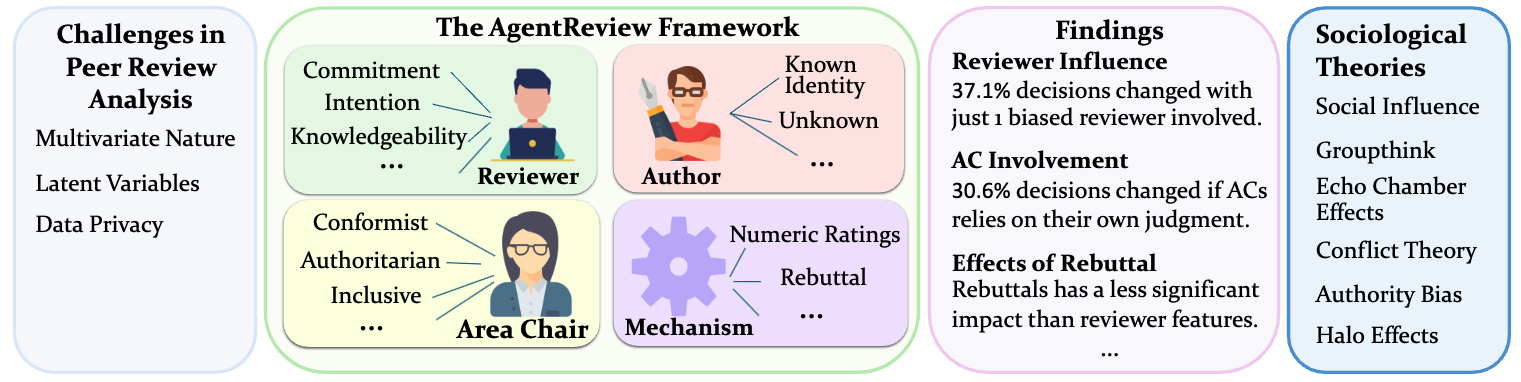

Traditional studies of peer review often rely on statistical analyses of past reviews, which struggle to capture the multivariate nature of the process: entangled factors such as reviewer expertise, motivation, and bias jointly shape review outcomes, making them difficult to study in isolation. Moreover, ethical and privacy concerns complicate the collection and analysis of real-world peer review data.

In our recent work, accepted as a main track (Oral) paper at EMNLP 2024, we introduce AgentReview, the first large language model (LLM)-based framework designed to simulate the peer review process. AgentReview allows for the controlled simulation of peer review dynamics using LLM agents, enabling researchers to explore biases, reviewer roles, and decision mechanisms in a way that respects privacy while providing actionable insights into how the peer review process can be improved.

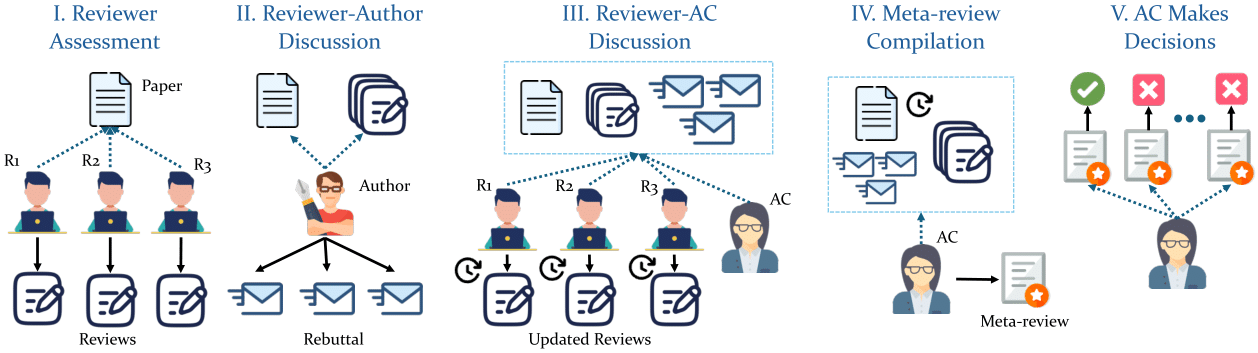

Figure 1: AgentReview is an open and flexible framework designed to realistically simulate the peer review process. It enables controlled experiments to disentangle multiple variables in peer review, allowing for an in-depth examination of their effects on review outcomes.